
What is Human?

Humans are unique, and one trait stands out above all: our ability to reason. The Earth is approximately 4.54 billion years old, yet modern humans have existed for only about the last 200,000 years, a tiny fraction of that time. Even so, within that span we have become the technologically advanced species we are today. What makes us so unique? Let's find out...

Depth of Consciousness

Neuroscience is a multidisciplinary field that combines physiology, anatomy, molecular biology, developmental biology, cytology, physics, computer science, chemistry and mathematical modeling to understand the fundamental and emergent properties of neurons, glia and neural circuits.

It is an ever-evolving field. The more researchers discover, the more convinced they become of the complexity of the human nervous system, and experts hold varied opinions on just how vast it is.

Let's try to understand what makes the brain unique. For this write-up, I'll draw five simple comparisons with computing devices:

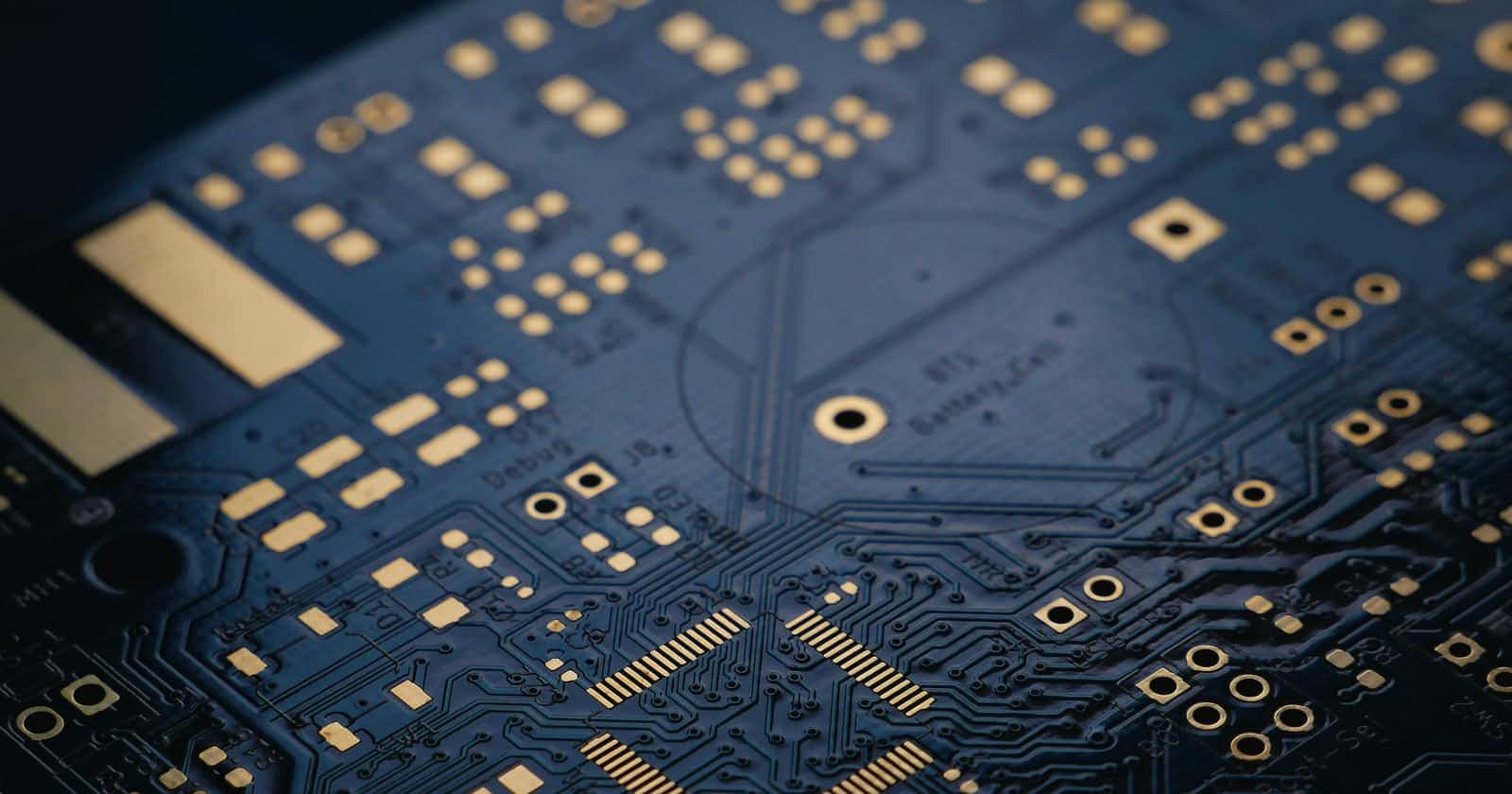

The brain's primary functional units are cells called neurons, together with their supporting tissue. This can be compared to the circuits of a CPU and its supporting hardware.

The brain is a powerhouse of complex activity: although it makes up just 2% of the body's weight, it consumes roughly 20% of the oxygen the body absorbs at rest, and more when metabolic demand is high. Similarly, a computer's CPU and GPU usually draw the most power of all its components.
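The comparison above boils down to a simple ratio. Using the approximate 2% and 20% figures (both are rounded estimates, not precise measurements):

$$
\frac{\text{share of resting oxygen use}}{\text{share of body weight}} = \frac{20\%}{2\%} = 10
$$

In other words, gram for gram, the resting brain uses roughly ten times more oxygen than the body's tissue does on average.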

Just as computer circuits operate in binary (1/0), neurons are often described as binary in their operation: they are either "firing" or not. And yes, just as in a computer, electrical signals carry information in the brain, albeit through a different, electrochemical mechanism.
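The all-or-none firing described above can be sketched as a simple threshold unit, in the spirit of the classic McCulloch-Pitts model. This is a toy abstraction, not a model of a real neuron: the function name `threshold_neuron`, the weights and the threshold value are all hypothetical choices made for illustration.

```python
def threshold_neuron(inputs, weights, threshold):
    """Return 1 ("fires") if the weighted sum of inputs reaches the threshold, else 0."""
    # Each input is scaled by its weight, loosely like the strength of a connection.
    total = sum(x * w for x, w in zip(inputs, weights))
    # All-or-none output: the unit either fires (1) or stays silent (0).
    return 1 if total >= threshold else 0


# With these illustrative values, the unit fires only when both inputs are active.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", threshold_neuron([a, b], [1.0, 1.0], threshold=1.5))
```

Raising or lowering the threshold changes how much combined input the unit needs before it fires, which is the only "decision" such a unit can make.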

The efficiency of our cerebral functions is usually attributed to the parallel relay of information: groups of neurons involved in similar functions receive and transmit electrical impulses simultaneously, acting as specialized sub-units within the brain. This is somewhat similar to the way a processor divides work among its separate cores.

In deep learning (a specialised subset of Artificial Intelligence), an artificial neural node mimics a biological neuron, while a layer of nodes mimics the brain's functional sub-units.
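A node and a layer of nodes can be sketched in a few lines. This is a minimal illustration, assuming a sigmoid activation (real networks often use others, such as ReLU); the helper names `node` and `layer`, and all weights and biases, are hypothetical values chosen only to show the mechanics.

```python
import math


def node(inputs, weights, bias):
    """One artificial node: weighted sum of inputs plus a bias, passed through a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))  # smooth output between 0 and 1


def layer(inputs, weight_rows, biases):
    """A layer is a group of nodes that all receive the same inputs."""
    return [node(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical layer of 3 nodes, each reading the same 2 inputs.
weights = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
biases = [0.0, 0.1, -0.1]
outputs = layer([1.0, 2.0], weights, biases)
print([round(o, 3) for o in outputs])
```

Unlike a strict fire/no-fire unit, each node here produces a graded value, which is what allows the weights to be adjusted gradually during training.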

So far, the goal of deep learning has been to loosely mimic the structure and function of the human brain in order to discern patterns in data. The technique aims to make accurate predictions by learning from data much as the human mind learns from experience.
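One reason stacking layers helps with discerning patterns: a single threshold node cannot detect "exactly one of two inputs is active" (the XOR pattern), but two layers of such nodes can. In the sketch below, the function names and weights are hypothetical and hand-set for illustration; in deep learning, weights like these would be learned from data instead.

```python
def step(z):
    """All-or-none activation: 1 if z is positive, else 0."""
    return 1 if z > 0 else 0


def xor_network(a, b):
    """Two layers of threshold nodes with hand-set (not learned) weights."""
    # Hidden layer: one node detects "a OR b", the other detects "a AND b".
    h_or = step(a + b - 0.5)
    h_and = step(a + b - 1.5)
    # Output layer: fire when OR is on but AND is off, i.e. exactly one input is on.
    return step(h_or - h_and - 0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_network(a, b))
```

Each hidden node recognises a simple feature, and the output node combines those features into a pattern that neither could detect alone.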

When you think about deep learning, think of features you've seen on computing devices that appear to perform with human-like intelligence.

A few examples are:

- Image Augmentation - Demo

- Face recognition

- Speech Recognition

- Speech Augmentation - Demo

- Digital assistants

- Self-driving cars

- Image-to-Text technology (e.g. Google Lens)

- Text-to-Image technology (generating visual interpretations of written sentences) - Demo

- Drug Discovery

- Extra Demos: Microsoft, Nvidia, Expo1, Expo2

The ideas and methods implemented in deep learning aren't exactly new. Researchers studied how the human brain learns to understand things: by forming reliable associations between the information it receives and the outcomes that follow. We have simply been trying to mimic this functionality artificially.
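The perceptron learning rule, introduced by Frank Rosenblatt in 1958, is one of the oldest examples of learning associations between inputs and outcomes. The sketch below is a toy illustration: the dataset (logical OR), the learning rate and the number of epochs are arbitrary assumptions, and modern deep learning trains networks with gradient descent and backpropagation rather than this rule.

```python
def predict(inputs, weights, bias):
    """Fire (1) if the weighted sum plus bias is positive, else stay silent (0)."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if z > 0 else 0


def train_perceptron(examples, epochs=10, lr=0.1):
    """Learn weights from (inputs, outcome) pairs using the perceptron rule."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(inputs, weights, bias)  # 0 when already right
            # Strengthen or weaken each connection in proportion to its input.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Toy data: the outcome is 1 whenever at least one input is active (logical OR).
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b = train_perceptron(data)
print([predict(x, w, b) for x, _ in data])
```

The model starts knowing nothing (all weights zero) and adjusts only when its prediction disagrees with the observed outcome, a simple form of learning by association.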

Building systems for such predictive tasks had long been a challenge, not least because we lacked enough digital data to learn from. Then came the exponential growth of technology, especially in the 21st century, which has produced a vast and ever-expanding volume of data and made the much-needed high-performance hardware (GPUs, TPUs, CPUs, RAM, etc.) available to handle more demanding tasks with better precision. The result has been the resurgence of deep learning.

Inevitably, this development has fuelled more frequent debates about the outlook for Artificial Intelligence (A.I.) and about A.I. taking over human jobs, with healthcare as a notable example.

Many medical experts do not foresee a takeover; rather, they expect A.I. to augment work in healthcare, a field saturated with complex protocols and procedures. If such a takeover ever happens, it is likely years away. That said, we have learnt never to underestimate the growth and trajectory of human technology.

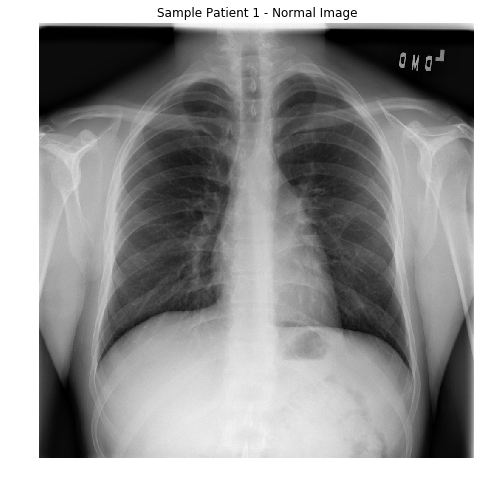

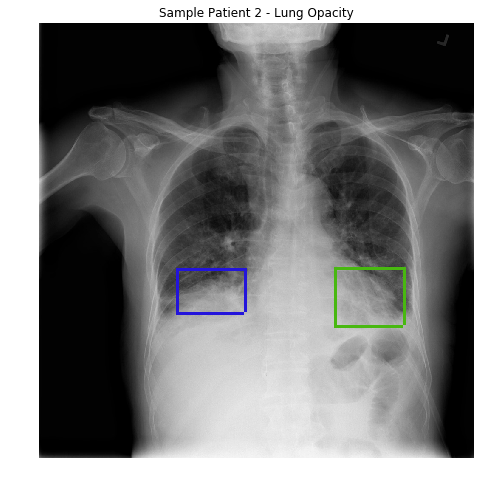

Right now, top medical institutes are undertaking studies in diagnostic radiology, cancer research and dentistry, with funding from major organizations (e.g. Google, Meta, Nvidia). Centres of learning are also being established to study the applications of Artificial Intelligence in healthcare, as well as the ethical issues its use might raise.

Healthcare is peculiar. Technical skills aside, empathy, a key concept in human care, has not been replaced by A.I. As long as human beings are the patients, showing empathy will go a long way toward their acceptance of care, and handling both objective and subjective outcomes is what makes every patient interaction unique. Admittedly, simple repetitive tasks can and will be taken over by A.I., since humans cannot naturally rival its computing power and speed. This is why popular debates should never have been built on a Tech vs. Human premise.

It helps to imagine what healthcare teams could achieve if their creativity, associative reasoning, complex pattern recognition and problem-solving skills were combined with the tremendous computing power, extraordinary speed and processing efficiency of computing devices. We would simply be augmenting our intelligence as humans, and such augmentation is needed to broaden access to healthcare, especially in regions of the world with a severe shortage of medical manpower.

The average human is still ahead of A.I. systems in many kinds of predictive reasoning, thanks to our strong sense of association. The advantage of computers is that, once successfully trained on a predictive task, they can outperform any human at that task through sheer computing speed. By some estimates, in raw signalling speed, your brain is about 10 million times slower than a computer.
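One rough way to arrive at a figure of this order is to compare signalling rates. Assuming, purely for illustration, a neuron's peak firing rate of about 200 Hz and a processor clock of about 2 GHz:

$$
\frac{2 \times 10^{9}\ \text{Hz}}{2 \times 10^{2}\ \text{Hz}} = 10^{7}
$$

This compares the speed of single operations only, and very different kinds of operation at that; it ignores the brain's massive parallelism, which is a large part of why the brain remains so capable despite its slower components.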

Conclusion

The race for a COVID-19 vaccine clearly showed the value of state-of-the-art research. The potential of placing oneself at the frontline of innovation cannot be overstated, and those who think further and act further will achieve more as our global society evolves.

A.I. is here to stay, even though the full extent of its potential is not yet known. I strongly believe it will be a driving force for much greater global development in the coming years.

Glossary

Computer vision: a field of artificial intelligence that trains computers to interpret and understand the visual world.

Computing: the use or operation of computers.

CPU (Central Processing Unit): the electronic circuitry that executes the instructions of a computer program.

Deep learning: a type of machine learning based on artificial neural networks, in which multiple layers of processing are used to extract progressively higher-level features from data.

Diagnostic radiology: the field of medicine that uses non-invasive imaging scans to diagnose patients.

GPU (Graphics Processing Unit): the processor responsible for rendering the images you see on screen; its highly parallel design also makes it well suited to deep learning.

Neurological: relating to the anatomy, functions, and organic components of nerves and the nervous system.

Neuroscience: the multidisciplinary scientific study of the nervous system.

Node: a computational unit in a neural network that mimics a biological neuron, processing its inputs through simple computational logic to produce an output.

State-of-the-art: very modern and using the most recent ideas and methods.

Specialty: a pursuit, area of study, or skill to which someone has devoted much time and effort and in which they are expert.